Using Dify with LangBot

Dify is an open-source large language model (LLM) application development platform. It combines Backend as a Service (BaaS) and LLMOps, enabling developers to quickly build production-grade generative AI applications.

Dify can be used to build chat assistants (including Chatflow), Agents, text generation applications, workflows, and other types of applications.

LangBot currently supports three types of Dify applications: Chat Assistant (including Chatflow), Agent, and Workflow.

Creating an Application in Dify

Please follow the Dify documentation to deploy Dify and create your application.

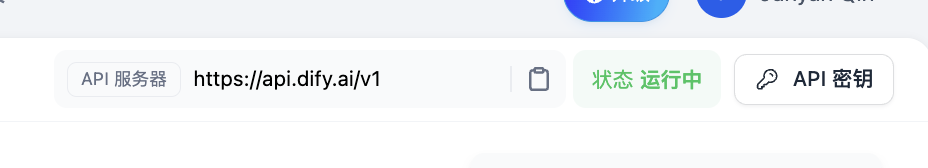

After publishing your application, open its API Access page and generate an API key.

Note down the API server address and the API key. You will need both when configuring the AI capability of your LangBot pipeline.
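To show how the API server address and API key fit together, here is a minimal Python sketch that builds (but does not send) a request to the Dify Service API for each of the three application types LangBot supports. It assumes Dify's public endpoints POST /chat-messages (Chat Assistant, Chatflow and Agent) and POST /workflows/run (Workflow), with the key sent as a Bearer token. The function names, the "chat"/"agent"/"workflow" labels, the placeholder key app-xxxx, and the user identifier are hypothetical and not taken from LangBot.

```python
import json
from typing import Any

# Hypothetical app-type labels mapped to Dify endpoint paths (relative to base-url).
ENDPOINTS = {
    "chat": "/chat-messages",      # Chat Assistant, including Chatflow
    "agent": "/chat-messages",     # Agent apps share the chat endpoint
    "workflow": "/workflows/run",  # Workflow apps
}


def normalize_base_url(server: str) -> str:
    """Return the API server address with exactly one trailing /v1 path."""
    server = server.rstrip("/")
    if not server.endswith("/v1"):
        server += "/v1"
    return server


def build_dify_request(
    server: str, api_key: str, app_type: str, query: str, user: str
) -> tuple[str, dict[str, str], bytes]:
    """Build the URL, headers and JSON body for one request; nothing is sent."""
    if app_type not in ENDPOINTS:
        raise ValueError(f"unsupported app type: {app_type}")
    url = normalize_base_url(server) + ENDPOINTS[app_type]
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # Agent apps do not support blocking mode, so they must stream.
    mode = "streaming" if app_type == "agent" else "blocking"
    body: dict[str, Any] = {"inputs": {}, "response_mode": mode, "user": user}
    if app_type != "workflow":
        # Chat-style apps take the user's message as "query";
        # workflow apps receive everything through "inputs".
        body["query"] = query
    return url, headers, json.dumps(body).encode("utf-8")


url, headers, payload = build_dify_request(
    "https://api.dify.ai/v1", "app-xxxx", "chat", "Hello", "langbot-user"
)
print(url)  # https://api.dify.ai/v1/chat-messages
```

Normalizing the address in one place means a self-hosted server entered with or without the /v1 suffix ends up at the same endpoint.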

INFO

The example above uses the Dify Cloud service. If you are using the self-hosted Community Edition, set base-url in LangBot to the address of your own Dify service, with /v1 appended as the path.

- If LangBot and Dify run on the same host and are both deployed with Docker, refer to the article Network Configuration Details. In this case, add langbot-network to the networks of every container in the docker-compose.yaml file that starts Dify, set the container name of the nginx container to dify-nginx, and finally set base-url to http://dify-nginx/v1 in the LangBot configuration.
- For other setups, please consult your company's operations team.
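The Docker Compose changes in the first bullet can be pictured as a transformation of the parsed docker-compose.yaml. The Python sketch below works on the file as a plain dict (for example, as produced by a YAML parser, which is not shown) and is only an illustration. Two details are assumptions based on general Compose behavior rather than on the linked article: langbot-network is declared at the top level as external: true so that Dify's containers join the existing network instead of creating a new one, and default is kept for services that previously declared no networks, because an explicit networks entry removes a service from the implicit default network.

```python
import copy
from typing import Any

NETWORK = "langbot-network"


def attach_to_langbot_network(compose: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of a Dify compose dict with every service on NETWORK."""
    result = copy.deepcopy(compose)
    for name, service in result.get("services", {}).items():
        # A service without a networks key is implicitly on "default";
        # keep it there once an explicit networks entry is added.
        nets = service.get("networks", ["default"])
        if isinstance(nets, dict):
            nets.setdefault(NETWORK, None)  # mapping form: {network: options}
        elif NETWORK not in nets:
            nets = [*nets, NETWORK]  # list form: [network, ...]
        service["networks"] = nets
        if name == "nginx":
            # LangBot reaches Dify at http://dify-nginx/v1 through this name.
            service["container_name"] = "dify-nginx"
    # Assumption: reuse the network LangBot already created instead of a new one.
    result.setdefault("networks", {})[NETWORK] = {"external": True}
    return result
```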

Configuring LangBot

Open the LangBot WebUI, then either create a new pipeline or open the AI Capability configuration page of an existing pipeline, and enter the API server address (base-url) and API key you saved earlier.

INFO

Workflow Output Key

If you are using a Dify workflow application, the workflow must return its output under the key summary; in practice, name the output variable of the workflow's End node summary.
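As a hedged illustration of what the summary key means: in blocking mode, Dify's POST /workflows/run returns the End node's variables under data.outputs, so a client reads the reply from data.outputs.summary. The function below is a hypothetical sketch of such a client, not LangBot's code, and the sample response is abbreviated to the fields it uses.

```python
from typing import Any


def extract_workflow_reply(response: dict[str, Any], key: str = "summary") -> str:
    """Return the text a blocking /workflows/run response carries under `key`."""
    data = response.get("data", {})
    if data.get("status") != "succeeded":
        raise RuntimeError(f"workflow did not succeed: {data.get('error')}")
    outputs = data.get("outputs") or {}
    if key not in outputs:
        # Usual cause: the End node's output variable is not named "summary".
        raise KeyError(f"workflow outputs have no {key!r} key: {sorted(outputs)}")
    return str(outputs[key])


sample = {
    "workflow_run_id": "run-1",
    "task_id": "task-1",
    "data": {"status": "succeeded", "outputs": {"summary": "Hello from Dify"}},
}
print(extract_workflow_reply(sample))  # Hello from Dify
```

Raising a KeyError that lists the keys actually returned makes a misnamed output variable easy to spot.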

Output Processing

When using a Workflow or Agent application, if you enable track-function-calls in the Output Processing settings of your LangBot pipeline, LangBot sends the user a message such as calling function xxx each time Dify executes a tool call.

However, if you are using a Chatflow (a Chat Assistant application built with workflow orchestration), only the text returned by the Answer (direct reply) node is output, regardless of this setting.
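The behavior described above can be sketched as a filter over Dify's streaming events. This Python sketch assumes the event shapes from Dify's Service API reference: Agent apps emit agent_thought events whose tool field lists the invoked tools separated by ";", and workflow apps emit node_started events whose data.node_type is tool. The function name, the "chat"/"agent"/"workflow" labels, the deduplication of repeated thoughts, and the exact notice wording are illustrative assumptions, not LangBot's implementation.

```python
from typing import Any, Iterable


def tool_call_notices(
    events: Iterable[dict[str, Any]], app_type: str, track_function_calls: bool
) -> list[str]:
    """Turn parsed Dify stream events into "calling function ..." notices."""
    notices: list[str] = []
    # Chat Assistant apps (Chatflow included) only relay the Answer node's
    # text, so no notices are produced for them whatever the setting is.
    if not track_function_calls or app_type not in ("agent", "workflow"):
        return notices
    seen: set[tuple[Any, str]] = set()
    for event in events:
        kind = event.get("event")
        if app_type == "agent" and kind == "agent_thought":
            # "tool" lists the invoked tools separated by ";" (empty if none).
            raw = event.get("tool") or ""
            tools = [t.strip() for t in raw.split(";") if t.strip()]
            thought_id = event.get("id")
            for tool in tools:
                # Assumption: a thought may be reported more than once as it
                # progresses, so announce each (thought id, tool) pair once.
                if thought_id is not None:
                    if (thought_id, tool) in seen:
                        continue
                    seen.add((thought_id, tool))
                notices.append(f"calling function {tool}")
        elif app_type == "workflow" and kind == "node_started":
            data = event.get("data") or {}
            if data.get("node_type") == "tool":
                notices.append(f"calling function {data.get('title', '')}")
    return notices
```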