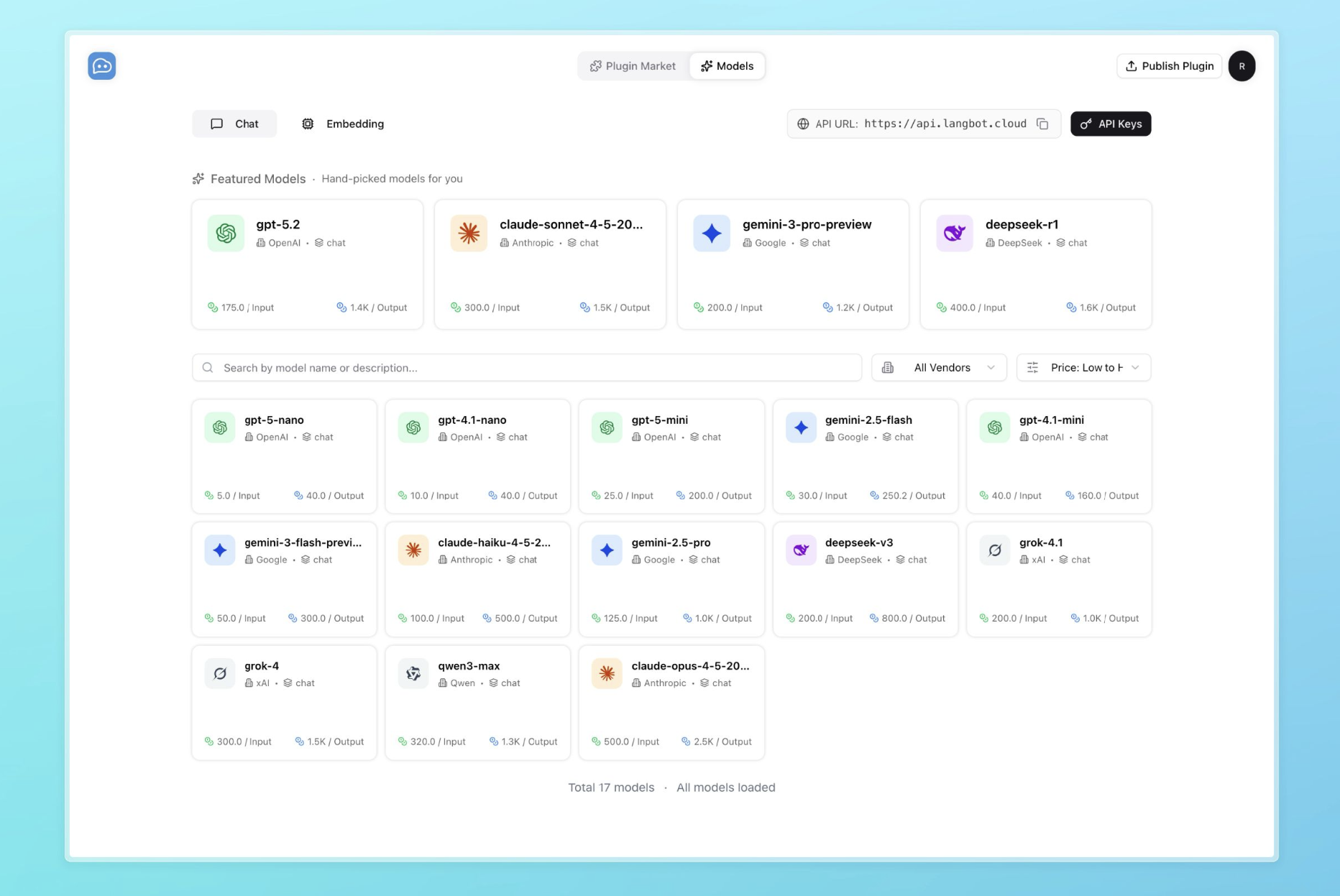

Configure Models

LangBot Models

LangBot Models is the official model service provided by LangBot. When you initialize a local instance with your LangBot Space account, the available models are added to your instance automatically, with no configuration required. You also receive some free quota so you can get started quickly.

For the list of currently available models, check LangBot Space.

Custom Models

You can also add models from other sources.

LLM Model

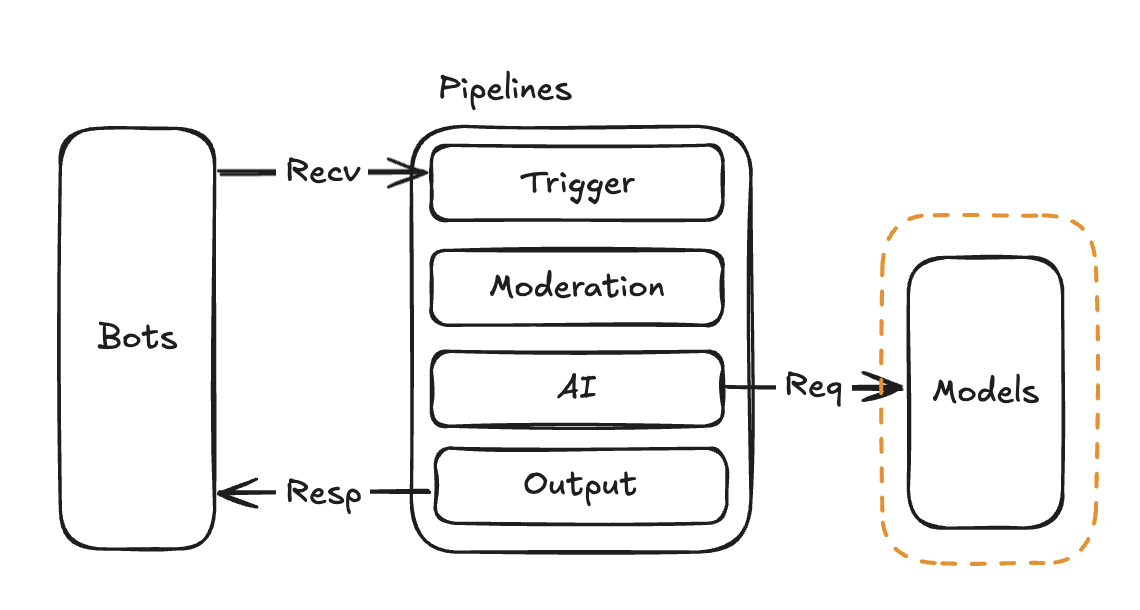

Pipelines use models to process messages. The first model you configure becomes the model for the default pipeline.

You can add multiple models and then select which one to use in a pipeline.

Enter these four parameters: Model Name, Model Provider, Request URL, and API Key, then submit.
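To make the role of each field more concrete, the sketch below shows how the four parameters might map onto a request. It assumes the provider exposes an OpenAI-compatible chat completions endpoint, which is an assumption and not true of every provider. `ModelConfig` and `build_chat_request` are hypothetical names used only for illustration and are not part of LangBot; the URL, model name, and key are placeholders. The request is built but never sent.

```python
import json
from dataclasses import dataclass
from urllib.request import Request


@dataclass
class ModelConfig:
    """Hypothetical container mirroring the four form fields."""
    model_name: str
    provider: str
    request_url: str
    api_key: str


def build_chat_request(cfg: ModelConfig, user_text: str) -> Request:
    """Build (but do not send) a chat request.

    Assumes an OpenAI-compatible API: POST {request_url}/chat/completions
    authenticated with a Bearer token. Other providers may differ.
    """
    url = cfg.request_url.rstrip("/") + "/chat/completions"
    body = {
        "model": cfg.model_name,
        "messages": [{"role": "user", "content": user_text}],
    }
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {cfg.api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


cfg = ModelConfig(
    model_name="example-model",                 # placeholder
    provider="openai-compatible",               # placeholder
    request_url="https://api.example.com/v1/",  # placeholder
    api_key="sk-placeholder",                   # placeholder
)
req = build_chat_request(cfg, "Hello")
print(req.full_url)
```

If a request built this way is rejected by the provider, the Request URL (for example, a missing or duplicated path segment) and the API Key are usually the first fields worth rechecking.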

Set the model capabilities according to what the specific model actually supports:

Visual Capability: must be enabled for the model to recognize images.

Function Calling: must be enabled for the model to use Agent tools in conversations.
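As a rough illustration of why these two switches matter, the sketch below builds a request payload in the OpenAI-compatible message format, attaching an image only when the visual flag is set and tool definitions only when function calling is set. This is one plausible way a client could honor such flags, not a description of LangBot's internals. `build_payload`, its flag names, and the `get_weather` tool are all hypothetical.

```python
from typing import Any, Optional


def build_payload(
    model: str,
    text: str,
    image_url: Optional[str] = None,
    tools: Optional[list] = None,
    vision: bool = False,
    function_calling: bool = False,
) -> dict:
    """Build a chat payload, gated by the two capability flags (hypothetical)."""
    content: Any = text
    if vision and image_url:
        # OpenAI-compatible multimodal content: a list of typed parts.
        content = [
            {"type": "text", "text": text},
            {"type": "image_url", "image_url": {"url": image_url}},
        ]
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": content}],
    }
    if function_calling and tools:
        payload["tools"] = tools
    return payload


# Hypothetical tool definition in the OpenAI-compatible "tools" format.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

# Both capabilities disabled: the image and the tool are left out.
plain = build_payload(
    "example-model", "What is in this picture?",
    image_url="https://example.com/cat.png", tools=[weather_tool],
)

# Both capabilities enabled: the image and the tool are included.
full = build_payload(
    "example-model", "What is in this picture?",
    image_url="https://example.com/cat.png", tools=[weather_tool],
    vision=True, function_calling=True,
)
```

Enabling a capability the underlying model does not support typically leads to provider-side errors or ignored inputs, which is why the flags should match the model's real characteristics.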

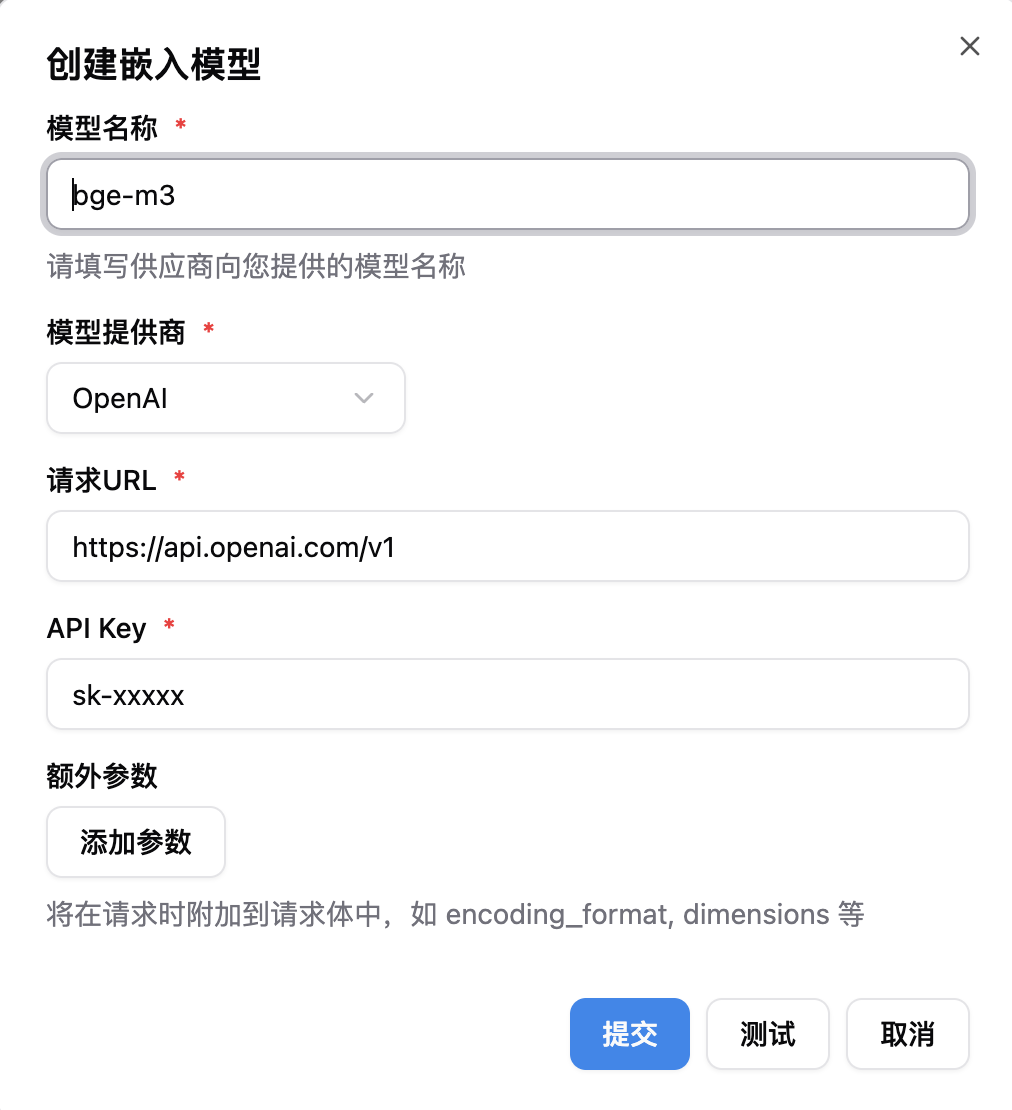

Embedding Model

Embedding models compute vector representations of messages. Configure one if you need to use knowledge bases.

Enter these four parameters: Model Name, Model Provider, Request URL, and API Key, then submit. After that, please configure the knowledge base to use this model.
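To illustrate what vector representations are used for, the sketch below ranks two knowledge base entries against a query by cosine similarity, a common way to compare embeddings. The three-dimensional vectors are made-up toy values; a real embedding model returns much longer vectors, and the retrieval method a given knowledge base uses may differ.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embedding model output (illustrative only).
query_vec = [1.0, 0.0, 1.0]
doc_vecs = {
    "refund policy": [0.9, 0.1, 0.8],
    "office hours": [0.0, 1.0, 0.1],
}

# Pick the entry whose vector points in the direction closest to the query.
best = max(doc_vecs, key=lambda name: cosine_similarity(query_vec, doc_vecs[name]))
print(best)  # prints "refund policy"
```

Because similarity is only meaningful between vectors from the same model, the query and the stored documents need to be embedded by the same embedding model.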

Using seekdb Built-in Embedding Model (Zero Configuration)

The system integrates the official embedding model provided by seekdb, which requires no parameters.

- On the "Embedding Model" page, select "seekdb-built-in".

- Click "Save"; the model is ready to use immediately.

- Select this model in your knowledge base for it to take effect.